After a two-year effort, I have released the first edition of "The Linux Command Line" in all of its 522-page glory!

I want to give special thanks to Mark Polesky for his extraordinary review and test of the book, as well as the rest of the review team: Jesse Becker, Tomasz Chrzczonowicz, Michael Levin, and Spence Miner.

Special thanks also go out to Karen Shotts for editing all of my so-called English.

To download the free PDF version of the book, go to https://sourceforge.net/projects/linuxcommand/files/ and get the file TLCL-09.12.pdf.

You may also purchase a printed version of the book in large, easy-to-read format (which is, in fact, very handy) by going to: http://www.lulu.com/content/paperback-book/the-linux-command-line/7594184

Enjoy!

Monday, December 14, 2009

Thursday, November 19, 2009

The Linux Command Line - Fourth Draft Now Available

The fourth draft of the book is now available. This version contains almost all of the review feedback received so far and has been edited through the final chapter. This draft does not yet include an index, but is otherwise close to being finished.

The new draft, named TLCL-09.11.pdf, is available here.

Enjoy!

Saturday, October 3, 2009

The Linux Command Line - Third Draft Now Available

The third draft is now available. This version features reformatted and captioned tables, some of the changes suggested by the review team (more to follow), and a number of small additions. It also includes the edited versions of the first 18 chapters.

If you are working on the review, please switch to this version. The new draft, named TLCL-09.10.pdf, is available here.

Thanks for your help!

Friday, August 14, 2009

The Linux Command Line - Second Draft Now Available

Hi Everyone,

I just posted the second draft of my book. This incorporates items from my "to do" list. It does not yet include any changes from the review team. Reviewers may switch to this version if they wish (just indicate that you are reviewing version 09.08 of the book) but may also continue with the first draft. The changes in this version are not extensive but it should read a little better. A few new items were added, and the table of contents now provides links to the individual chapters making navigation somewhat easier. Enjoy!

The new PDF is named TLCL-09.08.pdf and is available here.

Wednesday, July 22, 2009

I'm Looking For Reviewers

Sorry about my long absence, but as you will see, I have an excuse:

Hello All,

I have just finished the first draft of a book I'm writing, titled "The Linux Command Line," to be released under a Creative Commons license. With the initial writing completed, it's time for some editing and review. That means I'm looking for folks willing to perform some reviewing. In particular, I need technical reviewers who can gently point out my technical and historical errors, and I need less experienced users who can find areas where my explanations are unclear. Don't worry about grammar, spelling, and such; I have a "real" editor for that. At this stage I need gurus and sample users to test this thing.

The book is fairly long (about 475 pages) so a good review will take some time and effort on the part of any volunteers. If you make a serious contribution, I will add your name to the list of contributors in the "Acknowledgments" section of the first chapter. Don't laugh, that's all I got for doing a technical review on O'Reilly's "Bash Cookbook."

Unlike Bash Cookbook, however, my book will be freely distributable in PDF format, but I am reserving the right to sell printed versions.

Please feel free to take a look at the draft. It can be downloaded from my Sourceforge site at:

http://downloads.sourceforge.net/sourceforge/linuxcommand/TLCL-09.07.pdf?use_mirror=master

If you decide that you'd like to help out with the review, let me know and I will provide further details.

Many thanks!

Thursday, April 9, 2009

"We're Linux" Video Finalists

The Linux Foundation has announced the finalists in their "We're Linux" competition. While I consider all the entries rather weak, my favorite is this one:

Project: Building An All-Text Linux Workstation - Part 7

Today, we will finish up with printing by taking a look at the command line tools provided by CUPS.

CUPS supports two different families of printer tools. The first, Berkeley or LPD, comes from the Berkeley Software Distribution (BSD) version of Unix, and the second, SysV, comes from the System V version of Unix. Both families include comparable functionality, so choosing one over the other is really a matter of personal taste.

Setting A Default Printer

A printer can be set as the default printer for the system. This will make using the command line print tools easier. To do this we can either use the web-based interface to CUPS at http://localhost:631 or we can use the following command:

lpadmin -d printer_name

where printer_name is the name of a print queue we defined in Part 6 of the series.
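As a small sketch, we can wrap the command in a function that also confirms the change. The name set_default_printer is our own invention, and PDF is only an assumed queue name. Changing the system default normally requires administrative privileges and a running CUPS server.

```shell
#!/bin/bash

# set_default_printer: hypothetical helper, not part of CUPS.
# Usage: set_default_printer printer_name
set_default_printer () {
    # lpadmin -d sets the system default destination;
    # lpstat -d then reports the current default so we can check it.
    lpadmin -d "$1" && lpstat -d
}

# Example (left commented out because it needs a working CUPS setup):
#   set_default_printer PDF
```

If lpadmin fails, for instance because the queue does not exist, the && prevents lpstat from reporting a misleading result.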

Sending A Job To The Printer (Berkeley-Style)

The lpr program is used to send a job to the printer. It can accept standard input or file name arguments. One of the neat things about CUPS is that it can accept many kinds of data formats and can (within reason) figure out how to print them. Typical formats include PostScript, PDF, text, and images such as JPEG.

Here we will print a directory listing in three column format to the default printer:

ls /usr/bin | pr -3 | lpr

To use a different printer, append -P printer_name to the lpr command. To see a list of available printers:

lpstat -a
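To tie these pieces together, here is a minimal sketch of two helper functions. The names format_listing and print_listing are hypothetical, and PDF is an assumed queue name. The first function lets us preview the three-column output on the terminal before committing anything to paper.

```shell
#!/bin/bash

# format_listing and print_listing are hypothetical helper names.

# Format a directory listing in three columns.
# -t omits the page header and trailer, which is handy for a preview.
format_listing () {
    ls "$1" | pr -3 -t
}

# Send the formatted listing to a printer.
# With a second argument, -P selects that queue; otherwise the default is used.
print_listing () {
    if [ -n "$2" ]; then
        format_listing "$1" | lpr -P "$2"
    else
        format_listing "$1" | lpr
    fi
}

# Preview on the terminal (no printer needed):
#   format_listing /usr/bin | less
# Print to the default printer, then to a queue named PDF:
#   print_listing /usr/bin
#   print_listing /usr/bin PDF
```

Dropping the -t option from pr restores the dated page headers used in the original example.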

Sending A Job To The Printer (SysV-Style)

The SysV print system uses the lp command to send jobs to the printer. It can be used just as lpr in our earlier example:

ls /usr/bin | pr -3 | lp

However, lp has a different set of options. For example, to specify a printer, the -d (for destination) option is used. lp also supports many options for page formatting and printer control not found with the lpr command.
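Here is a short sketch of what a few of those options look like in practice. The function name print_report, the file name report.txt, and the queue name PDF are all assumptions made for illustration. Whether a given option has any effect depends on the printer and its driver.

```shell
#!/bin/bash

# print_report: hypothetical wrapper showing a few common lp options.
# Usage: print_report file [destination]
print_report () {
    local file=$1
    local dest=${2:-PDF}    # PDF is an assumed queue name

    # -d  destination (print queue)
    # -n  number of copies
    # -o  job option; landscape and sides are standard CUPS job options
    lp -d "$dest" -n 2 -o landscape -o sides=two-sided-long-edge "$file"
}

# Example (requires a running CUPS server):
#   print_report report.txt
```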

Examining Print Job Status

While a print job is being printed, you may monitor its progress with the lpq command. This will display a list of all the jobs queued for printing. Each print job is assigned a job number that can be used to control the job.

Terminating Print Jobs

Either the lprm (Berkeley) or the cancel (SysV) command can be used to remove a job from a printer queue. While the two commands have different option sets, either command followed by a print job number will terminate the specified job.
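The following sketch shows the two styles side by side. The helper name cancel_job is hypothetical, and the job number 42 in the comments is made up; a real job number comes from the output of lpq.

```shell
#!/bin/bash

# cancel_job: hypothetical helper. Removes one job by number using
# whichever style of command is requested.
# Usage: cancel_job job_number [bsd|sysv]
cancel_job () {
    local job=$1
    local style=${2:-bsd}

    case $style in
        bsd)  lprm "$job" ;;      # Berkeley style
        sysv) cancel "$job" ;;    # SysV style
        *)    echo "unknown style: $style" >&2; return 1 ;;
    esac
}

# Typical session (requires a running CUPS server):
#   lpq              # list queued jobs and their job numbers
#   cancel_job 42
#   lpq              # confirm the job is gone
```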

Getting Help

The following man pages cover the CUPS printing commands:

lp lpr lpq lprm lpstat lpoptions lpadmin cancel lpmove

In addition, CUPS provides excellent documentation of the printing system commands in the help section of the online interface to the CUPS server at:

http://localhost:631/help

Select the "Getting Started" link and the "Command Line Printing And Options" topic.

A Follow Up On Part 4

Midnight Commander allows direct access to its file viewer and built-in text editor. The mcview command can be used to view files and the mcedit command can be used to invoke the editor.

Further Reading

Other installments in this series: 1 2 3 4 5 6 7 8 9 10 11 12 13 14

Saturday, April 4, 2009

LinuxCommand.org (And Others) Under DDoS Attack

Since Thursday my domain registrar, register.com, has been under heavy distributed denial of service (DDoS) attack. This has, at times, made all or part of LinuxCommand.org unavailable. Here is the latest news:

Dear William,

Earlier today we communicated to you we were experiencing intermittent service disruptions as a result of a distributed denial of service (DDoS) attack – an intentionally malicious flooding of our systems from various points across the internet.

We want to update you on where things stand.

Services have been restored for most of our customers including hosting and email. However for some of our customers, services are not fully restored. We know this is unacceptable.

We are using all available means to restore services to every one of our customers and halt this criminal attack on our business and our customers’ business. We are working round the clock to make that happen.

We are committed to updating you in as timely manner as possible, please check your inbox or our website for additional updates.

Thank you for your patience.

Larry Kutscher

Chief Executive Officer

Register.com

Friday, April 3, 2009

Tip: Redirecting Multiple Command Outputs

Let's imagine a simple script:

#!/bin/bash

echo 1

echo 2

echo 3

Simple enough. It produces three lines of output:

1

2

3

Now let's say we wanted to redirect the output of the commands to a file named foo.txt. We could change the script as follows:

#!/bin/bash

F=foo.txt

echo 1 >> $F

echo 2 >> $F

echo 3 >> $F

Again, pretty straightforward, but what if we wanted to pipe the output of all three echo commands into less? We would soon discover that this won't work:

#!/bin/bash

F=foo.txt

echo 1 | less

echo 2 | less

echo 3 | less

This causes less to be executed three times. Not what we want. We want a single instance of less to input the results of all three echo commands. There are four approaches to this:

Make A Separate Script

script1:

#!/bin/bash

echo 1

echo 2

echo 3

script2:

#!/bin/bash

script1 | less

By running script2, script1 is also executed and its output is piped into less. This works but it's a little clumsy.

Write A Shell Function

We could take the basic idea of the separate script and incorporate it into a single script by making script1 into a shell function:

#!/bin/bash

# shell function

run_echoes () {

    echo 1

    echo 2

    echo 3

}

# call shell function and redirect

run_echoes | less

This works too, but it's not the simplest way to do it.

Make A List

We could construct a compound command using {} characters to enclose a list of commands:

#!/bin/bash

{ echo 1; echo 2; echo 3; } | less

The {} characters allow us to group the three commands into a single output stream. Note that the spaces between the {} and the commands, as well as the trailing semicolon after the third echo, are required.
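The same grouping also tidies up the earlier foo.txt example, since one redirection on the whole list can replace three separate >> operators. The sketch below uses a temporary file created with mktemp in place of foo.txt so that it can be run anywhere without leaving clutter behind.

```shell
#!/bin/bash

F=$(mktemp)   # temporary file standing in for foo.txt

# One redirection for the whole list. Using > truncates the file once,
# so rerunning the script does not keep appending to old output.
{ echo 1; echo 2; echo 3; } > "$F"

cat "$F"
```

Because the file is opened only once, this form is also a little more efficient than reopening it for every command.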

Launch A Subshell

Finally, we could do this:

#!/bin/bash

(echo 1; echo 2; echo 3) | less

Placing the list inside () creates a subshell, or another copy of bash and it executes the commands. This has the same result as enclosing the list of commands within {} but with more overhead. The real reason we would want to do this is if, instead of just redirecting the output, we wanted to put all three commands in the background:

#!/bin/bash

(echo 1; echo 2; echo 3) > foo.txt &

This doesn't make much sense for our echo commands (they execute too quickly to bother with), but if we have commands that take a long time to run, this technique can come in handy.
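One practical difference between the two forms is worth a quick sketch: a list in {} runs in the current shell, while a list in () runs in a separate process, so variable changes made inside () do not survive. The example also shows the background technique with a sleep standing in for a slow command and a temporary file standing in for foo.txt; both are assumptions made for illustration.

```shell
#!/bin/bash

count=0

# A list in {} runs in the current shell, so the assignment sticks.
{ count=1; }
echo "after {}: $count"

# A list in () runs in a subshell, so the change is lost when it exits.
( count=2 )
echo "after (): $count"

# Background a slow group and collect its output afterwards.
out=$(mktemp)                      # temporary file standing in for foo.txt
(sleep 1; echo 1; echo 2; echo 3) > "$out" &
echo "working on other things while job $! runs"
wait                               # block until the background job finishes
cat "$out"
```

The wait command matters here. Without it, the script could reach cat before the background group has written anything.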

Enjoy!

Friday, March 27, 2009

Project: Building An All-Text Linux Workstation - Part 6

In this installment, we will tackle printing. If you recall from the second installment when we installed Debian on the system, we selected the "print server" group of packages to be included with our installation. This installed CUPS (Common Unix Printing System) and related programs. This set of packages allows the system to print local print jobs and (if configured to do so) act as a print server to other systems on the local network.

Installing cups-pdf

One of the cool things we can do is install the cups-pdf package which supports a virtual printer that creates PDF files in lieu of actual printed output. After installation, we will take advantage of the package to demonstrate how to configure CUPS. Using apt-get we can install the package this way:

sudo apt-get install cups-pdf

Configuring CUPS

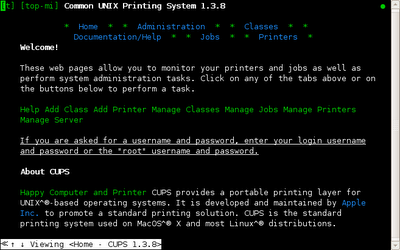

CUPS, by default, makes available a web-based configuration system. To access it, we will use w3m:

w3m http://localhost:631

which will open the web page on the local network interface using port 631. After the page opens, we will get a screen like this:

With this interface, you can configure:

- Local USB, parallel port and virtual printers.

- Remote IPP (Internet Printing Protocol) printers. CUPS will find them automatically if your network allows it.

- Remote SMB (Windows shared) printers.

To add the cups-pdf virtual printer, we perform the following steps:

- Using the tab key, move the cursor to the link labeled "Add Printer" and press enter.

- We will see a new page with some input fields. They are delimited with square bracket characters ([]). To enter data into the fields, move the cursor to the field and press enter. A text prompt will appear at the bottom of the screen.

- In the first field, "Name:" we will enter the name of the printer to be added. This name is like a file name and should be one word with no spaces. We will call our new printer, PDF. Type the letters PDF at the text prompt and press enter.

- Press the tab key to move to the next field, "Location:" and enter "localhost" using the text prompt.

- Press the tab key to move to the next field, "Description:" and enter "CUPS-PDF Virtual Printer."

- Press the tab key to move to the link labeled "Continue" and press enter. After a few seconds, we will see a new screen with a pull-down box of printer devices. Using the tab key move the cursor to the field labeled "Device:" and press enter. The contents of the list will appear.

- Using the arrow keys, select the entry "CUPS-PDF (Virtual PDF Printer)" and press enter.

- Press the tab key to move to the "Continue" link. Press enter.

- After a few seconds, a new screen will appear, again with a pull-down box. This box is for selecting drivers. The CUPS-PDF driver does not really need this, so open the box labeled "Make:" and select "Generic" and press enter.

- Move to the "Continue" link and press enter.

- The next screen will appear and we can skip over the fields (they should already be correct). Move the cursor to the link labeled "Add Printer" and press enter.

- You will briefly see a screen announcing that the printer has been successfully added. It will be followed by a page of printer options. If you need to change any, such as default paper size, do so now. When done, move to the link labeled "Set Printer Options" and press enter.

- The final screen contains a summary of the printer setup and contains a list of links for controlling the printer including printing a test page.

At this point we are done. You may move to the "Home" link at the top of the page to repeat the process to add more printers.
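To check the new queue from the command line, we can send it a small text job. This is only a sketch: test_pdf_printer is a hypothetical helper name, and PDF is the queue name chosen in the steps above. Where the finished file ends up depends on the settings in /etc/cups/cups-pdf.conf; a PDF directory in the user's home directory is a common default, but treat that as an assumption and check your own configuration.

```shell
#!/bin/bash

# test_pdf_printer: hypothetical helper.
# Sends a one-line text job to a queue and then shows that queue.
# Usage: test_pdf_printer [printer_name]
test_pdf_printer () {
    local queue=${1:-PDF}    # PDF is the queue name used in this installment

    echo "Hello from the all-text workstation" | lpr -P "$queue"
    lpq -P "$queue"
}

# Example (requires the CUPS server to be running):
#   test_pdf_printer
```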

That's all for this installment. Next time we will look at some printer commands that we can use with our new printing capability.

Further Reading

Other installments in this series: 1 2 3 4 5 6 7 8 9 10 11 12 13 14

Monday, March 23, 2009

Saturday, March 21, 2009

Project: Building An All-Text Linux Workstation - Part 5

In today's episode, we'll look at sudo and some text editing stuff. So fire up your text boxes and let's get going!

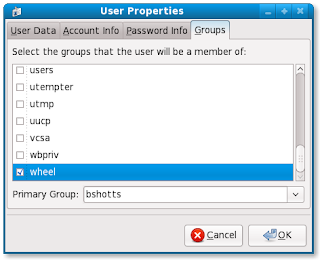

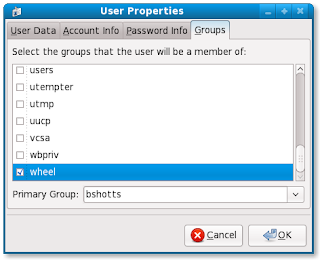

sudo

If you're an Ubuntu user, you probably already know a little about sudo. It's the command you use to get temporary administrative privileges for doing such things as editing configuration files and installing software. sudo allows users to enter their own password instead of the root password to perform privileged tasks. Further, sudo can be configured to allow specific users specific privileges. For example, a user can be allowed to only execute a specific command as the superuser.

sudo is invoked this way:

sudo command

where command is the command to be executed with escalated privileges.

One of the inconveniences on our all-text system is that we must either log in as root or use the su command to shut the system down, since only the superuser is allowed to shut down the system. This is a sensible precaution, considering that Linux systems are designed to support multiple users at the same time.

To fix this problem, we'll install sudo on our system. We can do this with either aptitude or apt-get. As root, enter the following command:

apt-get install sudo

and the sudo package will be installed.

sudo is controlled by a configuration file named /etc/sudoers. The sudoers file is a little unique in that it wants to be edited only with the visudo program. You can actually edit it with any text editor, but the visudo program checks the syntax of the file to help prevent errors. This is important for any file with the security implications of sudoers.

To edit the file, we simply (as root again) enter the command:

visudo

and the following screen will appear:

Despite the name, the visudo program on our Debian system uses the nano text editor and not vi. This makes it much easier for new users. Our change to the sudoers file is very simple. Add this line in the section labeled "User privilege specification" and then save the file and exit the editor by first typing ctrl-o and then ctrl-x:

me ALL=(ALL) ALL

Substitute your user name for the name "me" and this configuration will allow you to execute any command with root privileges by entering your own password.

To allow a user of the system to only run the poweroff command (performs the same as shutdown but does not require the time to be specified), we could add this line to the file:

username ALL=(ALL) /sbin/poweroff

where username is the name of the user.
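After making a change like this, it is worth verifying the result. The sketch below wraps the syntax check that visudo can perform without opening an editor. The function name check_sudoers is hypothetical. The commented commands show how the affected user might then confirm the new privilege.

```shell
#!/bin/bash

# check_sudoers: hypothetical helper that syntax-checks a sudoers file
# without editing or installing it. With no argument it checks /etc/sudoers.
# Usage: check_sudoers [file]
check_sudoers () {
    # -c  check only, do not open an editor
    # -f  name the file to check
    visudo -c -f "${1:-/etc/sudoers}"
}

# As root:
#   check_sudoers
# As the user named in the poweroff rule:
#   sudo -l                 # list the commands this user may run
#   sudo /sbin/poweroff     # shuts the machine down
```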

nano

As we have seen, our Debian system has the nano text editor installed by default. nano is a clone of an earlier editor named pico that is included with the pine email program. pine has some license issues that prevent it from being included with some Linux distributions, so a replacement version of its editor was developed.

To use nano, type the following:

nano textfile

where textfile is the name of a file to edit.

As text-based editors go, it's pretty easy to use. It provides a small list of commands at the bottom of the screen. Pressing the ctrl-g key brings up the help screen which displays all of its commands. nano does support the mouse. See the nano man page for details.

nano uses a global configuration file named /etc/nanorc and, if available, a local configuration file named ~/.nanorc. To make an initial copy of the local configuration file, copy the global file to your home directory:

me@linuxbox:~$ cp /etc/nanorc ~/.nanorc

One useful change we can make to the configuration file is the activation of syntax highlighting. This can be done by removing comments from several lines at the end of the file. The last section of the configuration file, the "Color setup" section, has a number of "include" statements which load syntax definitions. To enable syntax highlighting support, remove the comment symbol from the beginning of the line. Change lines like this:

## Bourne shell scripts

#include "/usr/share/nano/sh.nanorc"

to:

## Bourne shell scripts

include "/usr/share/nano/sh.nanorc"

for as many languages as you wish to support.
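If we want every available syntax definition, editing each line by hand gets tedious. Here is a minimal sketch that does the job with sed instead. The function name enable_nano_syntax is hypothetical, and the -i option assumes GNU sed, which is what a Debian system provides.

```shell
#!/bin/bash

# enable_nano_syntax: hypothetical helper. Uncomments every line that
# starts with "#include" (or "# include") in the given nanorc file.
# Lines starting with "##" are descriptive comments and are left alone.
# Usage: enable_nano_syntax file
enable_nano_syntax () {
    sed -i 's/^#[[:space:]]*include /include /' "$1"
}

# Usage, after copying /etc/nanorc to ~/.nanorc:
#   enable_nano_syntax ~/.nanorc
```

Since the function edits the file in place, it is a good idea to run it on the copy in the home directory rather than on /etc/nanorc itself.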

Syntax highlighting is automatically activated based on the file name extension of the file being edited. For example, if we edit a file named foo.html and the HTML syntax definition has been included in the .nanorc file, the foo.html file will be displayed with syntax highlighting when loaded. The colors can be toggled on and off by pressing alt-y. This presents a problem for shell scripts, however, as most do not have a file extension in their name. This can be solved in one of two ways. First, add the extension ".sh" to the file name, or second, invoke nano with the -Y sh option, which forces the selection of the sh highlighting. It might be a good idea to add an alias such as:

alias nano-sh='nano -Y sh'

to your .bashrc file to make this more convenient.

vim

Real Linux users, of course, don't use nano. They use vim, the enhanced replacement for the traditional Unix editor, vi. The version of vim installed by default on our system is called vim-tiny, a subset of the full package. I recommend installing the full vim package. This can be done with apt-get like so:

apt-get install vim

I'm not going to cover vim in this posting as it would take too much space (it consumed a chapter in my upcoming book) but there is a pretty good on-line book (in PDF format) that describes it in detail. See The Vim Book. Seriously, to be a real Linux person you should take the time to learn it. I know it's not easy, but you'll really enjoy lording it over the other Linux newbies once you do.

Well, that's all for this time. See you again soon!

Further Reading

Other installments in this series: 1 2 3 4 5 6 7 8 9 10 11 12 13 14

sudo

If you're an Ubuntu user, you probably already know a little about sudo. It's the command you use to get temporary administrative privileges for doing such things as editing configuration files and installing software. sudo allows users to enter their own password instead of the root password to perform privileged tasks. Further, sudo can be configured to allow specific users specific privileges. For example, a user can be allowed to only execute a specific command as the superuser.

sudo is invoked this way:

sudo command

where command is the command to be executed with escalated privileges.

One of the inconveniences on our all-text system is that we must either login as root or use the su command to shut the system down, since only the superuser is allowed to shutdown the system. A sensible precaution considering that Linux systems are designed to support multiple users at the same time.

To fix this problem, we'll install sudo on our system. We can do this with either aptitude or apt-get. As root, enter the following command:

apt-get install sudo

and the sudo package will be installed.

sudo is controlled by a configuration file named /etc/sudoers. The sudoers file is a little unique in that it wants to be edited only with the visudo program. You can actually edit it with any text editor, but the visudo program checks the syntax of the file to help prevent errors. This is important for any file with the security implications of sudoers.

To edit the file, we simply (as root again) enter the command:

visudo

and the following screen will appear:

Despite the name, the visudo program uses the nano text editor and not vi. This makes it much easier for new users. Our change to the sudoers file is very simple. Add this line in the section labled "User privilege specification" and then save the file and exit the editor by first typing ctrl-o and then ctrl-x.:

me ALL=(ALL) ALL

Substitute your user name for the name "me" and this configuration will allow you to execute any command with root privileges by entering your own password.

To allow a user of the system to only run the poweroff command (performs the same as shutdown but does not require the time to be specified), we could add this line to the file:

username ALL=(ALL) /sbin/poweroff

where username is the name of the user.

nano

As we have seen, our Debian system has the nano text editor installed by default. nano is a clone of an earlier editor named pico that is included with the pine email program. pine has some license issues that prevents it from being included with some Linux distributions so a replacement version of its editor was developed.

To use nano, type the following:

nano textfile

where textfile is the name of a file to edit.

As text-based editors go, it's pretty easy to use. It provides a small list of commands at the bottom of the screen. Pressing the ctrl-g key brings up the help screen which displays all of its commands. nano does support the mouse. See the nano man page for details.

nano uses a global configuration file named /etc/nanorc and, if available, a local configuration file named ~/.nanorc. To make an initial copy of the local configuration file, copy the global file to your home directory:

me@linuxbox:~$ cp /etc/nanorc ~/.nanorc

One useful change we can make to the configuration file is the activation of syntax highlighting. This can be done by removing comments from several lines at the end of the file. The last section of the configuration file, the "Color setup" section, has a number of "include" statements which load syntax definitions. To enable syntax highlighting support, remove the comment symbol from the beginning of the line. Change lines like this:

## Bourne shell scripts

#include "/usr/share/nano/sh.nanorc"

to:

## Bourne shell scripts

include "/usr/share/nano/sh.nanorc"

for as many languages as you wish to support.

Syntax highlighting is automatically activated based on the file name extension of the file being edited. For example, if we edit a file named foo.html and the HTML syntax definition has been included in the .nanorc file, the foo.html file will be displayed with syntax highlighting when loaded. The colors can be toggled on and off by pressing alt-y. This presents a problem for shell scripts, however as most do not have a file extension in their name. This can be solved in one of two ways. First, add the extension ".sh" to the file name, or second, invoke nano with the -Y sh option, which forces the selection of the sh highlighting. It might be a good idea to add an alias such as:

alias nano-sh='nano -Y sh'

to your .bashrc file to make this more convenient.

vim

Real Linux users, of course, don't use nano. They use vim, the enhanced replacement for the traditional Unix editor, vi. The version of vim installed by default on our system is called vim-tiny, a subset of the full package. I recommend installing the full vim package. This can be done with apt-get like so:

apt-get install vim

I'm not going to cover vim in this posting as it would take too much space (it consumed a rather large chapter in my upcoming book) but there is a pretty good on-line book (in PDF format) that describes it in detail. See The Vim Book. Seriously, to be a real Linux person you should take the time to learn it. I know it's not easy, but you'll really enjoy lording it over the other Linux newbies once you do.

Well, that's all for this time. See you again soon!

Further Reading

Other installments in this series: 1 2 3 4 5 6 7 8 9 10 11 12 13 14

Wednesday, March 11, 2009

Project: Building An All-Text Linux Workstation - Part 4

In this installment, we will explore web browsing and install a couple of packages to help with file management.

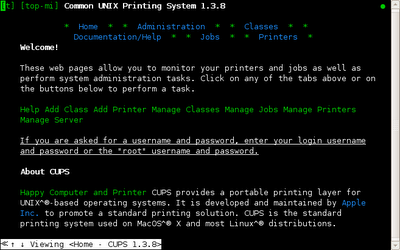

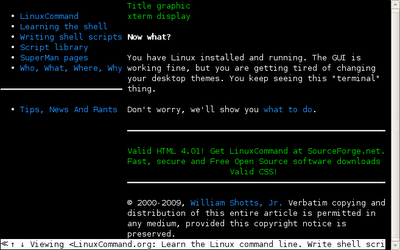

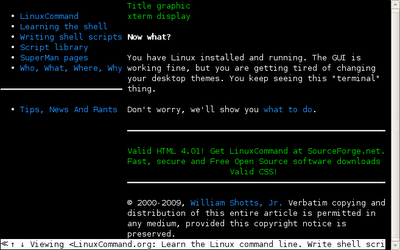

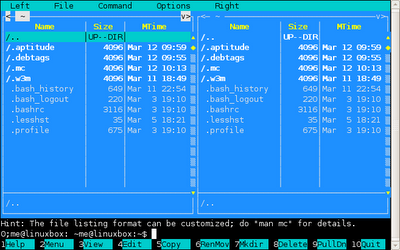

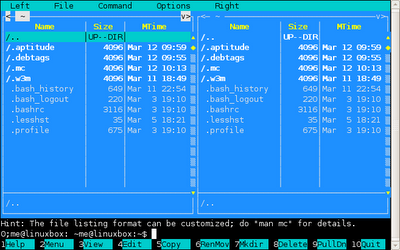

A Text Web Browser?

Yes, there are such things. In fact, there are several available for Linux. Our Debian workstation has one installed by default. Called w3m, it is a full-featured browser that operates in text mode. So what can you do with it? Well, we won't be watching YouTube with it, that's for sure, but many well-written web sites (especially those designed to follow acceptable standards of accessibility) will render just fine. We can try it out:

me@linuxbox:~$ w3m linuxcommand.org

and after a few seconds we will see this:

The arrow keys will navigate, and the tab key will advance from link to link. Shift-h will bring up the help screens and shift-b will perform a "back" function (this will get you out of help too). Press the q key to quit w3m. The program can do tabbed browsing, render tables and has a number of command line tricks. This blog renders fine too, so you can now follow along directly on our all-text system.

Unfortunately, there is a bug that prevents w3m from using the mouse on the console to help with navigation. Fortunately, there are other browsers that you can install. See the link at the end of this article.

Automatically Mounting USB Devices

If we insert a USB flash drive into our system, we will see a kernel message appear on the screen. This is because the kernel sends its messages to the console in the hopes that an ever-vigilant operator (that's you) is paying attention. However, this message means very little, as it only announces the fact that the kernel has detected a device attached to the USB port. It does not mean that the system has done anything useful, like mounting the device. We could manually mount the device, but that is a nuisance.

To solve this problem we will install a package called usbmount that can automatically mount USB devices. We can do this using aptitude. Just search for the "usbmount" package and install it. We described the process in installment 3.

After the package is installed, we must modify its configuration file to allow support for VFAT file systems, the type most often used on USB drives. As a precaution, usbmount does not enable VFAT since the kernel does not fully support the "sync" mount option on VFAT file systems. Normally, usbmount allows USB devices to be removed without unmounting. It does this by keeping file systems "synchronized," that is, it immediately writes changes to the device rather than waiting to consolidate multiple writes for improved performance. With VFAT enabled, the user must issue a sync command before removing a USB VFAT device.

After the package is installed, we need to (as root) edit the /etc/usbmount/usbmount.conf file and change the following two lines:

FILESYSTEMS="ext2 ext3"

to:

FILESYSTEMS="ext2 ext3 vfat"

and:

FS_MOUNTOPTIONS=""

to:

FS_MOUNTOPTIONS="-fstype=vfat,gid=floppy,dmask=0007,fmask=0117"
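The same two changes can be made with sed instead of an editor. This is a hedged sketch that operates on a scratch file containing only the two default lines quoted above; the real /etc/usbmount/usbmount.conf holds more settings, and its defaults may differ on other versions of the package, in which case the patterns would simply not match:

```shell
# Scratch stand-in for /etc/usbmount/usbmount.conf.
conf=$(mktemp)
cat > "$conf" <<'EOF'
FILESYSTEMS="ext2 ext3"
FS_MOUNTOPTIONS=""
EOF

# Rewrite each line only if it still has the default value shown above.
sed -e 's/^FILESYSTEMS="ext2 ext3"$/FILESYSTEMS="ext2 ext3 vfat"/' \
    -e 's/^FS_MOUNTOPTIONS=""$/FS_MOUNTOPTIONS="-fstype=vfat,gid=floppy,dmask=0007,fmask=0117"/' \
    "$conf" > "$conf.new"
cat "$conf.new"
```

On the real system this would be run as root against a backup copy of the configuration file, with the result checked before it replaces the original.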

After the configuration file is modified, usbmount will automatically mount VFAT devices. You will find the mount point in the /media directory. When a flash drive is inserted we can verify the mount using df:

me@linuxbox:~$ df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/hda1 18856292 980140 16918280 6% /

tmpfs 160096 0 160096 0% /lib/init/rw

udev 10240 88 10152 1% /dev

tmpfs 160096 0 160096 0% /dev/shm

/dev/sda1 15560 5944 9616 39% /media/usb0

and we see that the drive has been mounted on /media/usb0. Just remember to use the sync command before removing the device, or really bad things may happen to the drive:

me@linuxbox:~$ sync
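If you would rather not scan the df listing by eye, the mount point can be picked out with awk. The sketch below is illustrative only: it parses a copy of the sample df output shown above, held in a variable, so that it runs the same way on any machine.

```shell
# Sample df output copied from the listing above.
df_output='Filesystem 1K-blocks Used Available Use% Mounted on
/dev/hda1 18856292 980140 16918280 6% /
tmpfs 160096 0 160096 0% /lib/init/rw
udev 10240 88 10152 1% /dev
tmpfs 160096 0 160096 0% /dev/shm
/dev/sda1 15560 5944 9616 39% /media/usb0'

# Print the device whose mount point (the last field) is /media/usb0.
device=$(printf '%s\n' "$df_output" | awk '$NF == "/media/usb0" { print $1 }')
echo "$device"
```

On a live system the same awk filter could read from df -P instead of the sample text. An empty result would mean that nothing is mounted on /media/usb0.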

Midnight Commander

The last package we will install in this episode is Midnight Commander, a text based file manager. Using aptitude, install the mc package and the following additional packages that are recommended: arj, bzip2, odt2txt, unzip, and zip. If you prefer, you can use apt-get to install the packages as the mc package is a little hard to find using aptitude:

linuxbox:~# apt-get install mc arj bzip2 odt2txt unzip zip

After mc is installed we can fire it up:

me@linuxbox:~$ mc

and the following screen will appear:

The numbered blocks along the bottom of the screen correspond to the function keys F1-F10 and give access to many of the program's functions, and it has a lot of them! Unlike w3m, mc supports the mouse well.

That's all for this installment. While you're waiting for Part 5, study Midnight Commander. It has a help function and a man page. That should keep you busy for a while! Also, if you are interested in other text-based web browsers, check out the Text Mode Browser Roundup from Linux Journal.

Further Reading

Other installments in this series: 1 2 3 4 5 6 7 8 9 10 11 12 13 14

Monday, March 9, 2009

Dvorak Likes Linux

I never thought that I would live long enough to see it, but John C. Dvorak, professional curmudgeon, likes Ubuntu!

Thursday, March 5, 2009

Interview With Steve Bourne, Creator Of sh

Computerworld has a very interesting interview with Steve Bourne, the author of sh (the ancestor of bash) where he talks about the history of the Unix shell. A good read for you Unix history buffs out there.

Wednesday, March 4, 2009

Project: Building An All-Text Linux Workstation - Part 3

In this installment we'll learn how to navigate the text environment and install our first packages.

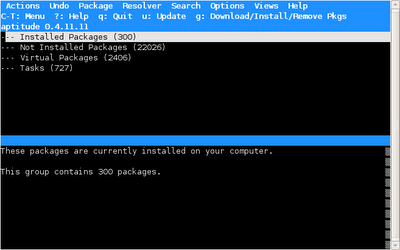

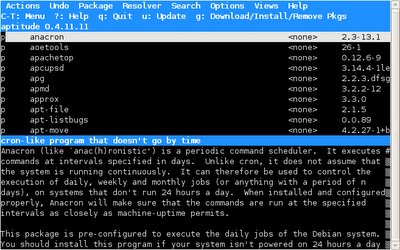

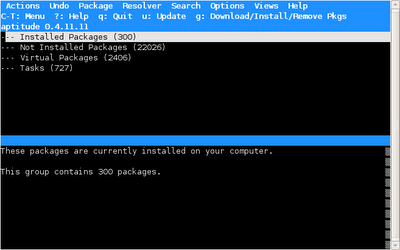

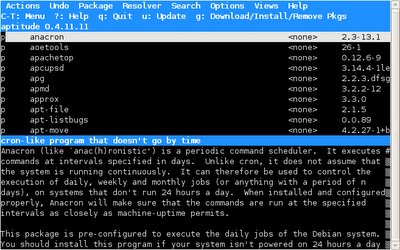

If you have gotten through parts 1 and 2 of our series, you now have a sparkling new Linux system that displays -- a prompt! But if you think it only displays one prompt, you'd be wrong, as we shall soon see.

Let's fire up our system and log in again as the root user.

One of the commands I often use is locate, which rapidly searches a small database of files installed on the system. locate is installed on our system but when we try to use it we get the following message:

linuxbox:~# locate foo

locate: can not open `/var/lib/mlocate/mlocate.db': No such file or directory

This message appears when you try to use the locate command before the database is created. Normally the database is rebuilt each night by a cron job, but unless you let the machine run overnight, the database will never get built. We'll fix this problem in a little bit, but to solve the problem in the meantime, we'll run the database update program manually:

linuxbox:~# updatedb

After it completes, locate should start to work. If you are unfamiliar with this wonderful utility, now is a good time to look at its man page.
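A quick way to tell whether the database has been built yet is to test for the file named in the error message. This is a small sketch under the assumption that the database lives at the path shown above (other locate implementations keep it elsewhere) and that we are logged in as root, since ordinary users normally cannot read that directory:

```shell
# Path taken from the error message above.
db=/var/lib/mlocate/mlocate.db

if [ -r "$db" ]; then
    db_status="present"
else
    db_status="missing or unreadable; run updatedb as root"
fi
echo "locate database: $db_status"
```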

If we enter the command:

linuxbox:~# locate foo

We will get back a long list of filenames containing the string "foo." Go ahead and do this. Notice how the list scrolls off the top of the screen. Next, type shift-PageUp and notice how we are able to scroll up the list. Shift-PageDown scrolls downward.

The terminal screen is only 80 characters wide but sometimes a command will output lines wider than 80 characters. This will usually cause the text to wrap but in some rare instances it will actually go off the edge of the screen. In these cases, you can scroll the screen sideways by using the shift-right arrow and shift-left arrow.

To see the next keyboard trick, type Alt-F2 and you will see a new login prompt. Actually you are seeing another virtual terminal you can log into. Go ahead and log in using your ordinary user name and password. Now try the who command:

me@linuxbox ~$ who

root tty1 2009-03-04 13:49

me tty2 2009-03-04 14:28

As we can see, we have two users logged in. Type Alt-F3 and we'll see another virtual terminal. In fact, on our system, Alt-F1 through F6 provide separate terminals we can use. You can use Alt-right and left arrows to rapidly cycle through them.

Wouldn't It Be Great If The Mouse Worked?

Let's go back to the first terminal session where root is logged in by typing Alt-F1. Then start up the aptitude program:

linuxbox:~# aptitude

aptitude is a fancy character based way to install packages on Debian and other Debian-based distributions. It has many features and is a handy way to manage packages on our system. The apt-get program is also available. aptitude features a multi-pane screen:

The user interface took me a few minutes to figure out, but it made sense after I had installed a few packages with it. We're going to use aptitude to install a couple of packages. We'll use its search feature to help us out. Type / and a search prompt should appear. Enter "anacron" and it should find the package in its database.

You can use the tab key to toggle between the two display panes. With the package name highlighted, press the + key to mark the package for installation. Next, let's do another search, this time for "gpm". If it does not find it the first time, type "n" to search for the next occurrence. Repeat until it finds a package named simply gpm. This use of / (search) and n (next) is the same as the less program. Again, type + to mark the package for installation.

With our two packages selected, it's time to install them. We do this by typing "g" (for "go"). aptitude will display a summary of the actions it is about to take and by pressing "g" a second time, the installation will commence. When installing gpm for the first time, you will see an error message about being unable to shut down the daemon. This may be safely ignored.

When the aptitude screen returns, type "q" to quit.

We have now installed anacron, which will make sure that periodic tasks (like running updatedb) take place even if the machine is not run continuously. We also installed gpm which should make the mouse work. Some of the programs that we will install in upcoming installments can use a mouse, but its most useful feature is that we can now use the mouse to copy and paste text, just like we can on the X display. The only difference is that the right button is used to paste rather than the middle button.
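For readers who prefer a single command to the aptitude screens, the same two packages can be requested through apt-get. The sketch below is a cautious variant of that idea: it uses apt-get's -s (simulate) option, which only prints what would be done, and it falls back to a message on systems where apt-get is missing or the package lists are not available.

```shell
# The two packages marked for installation in aptitude above.
pkgs="anacron gpm"

if command -v apt-get >/dev/null 2>&1; then
    # -s simulates the installation; remove it and run as root to install for real.
    apt-get -s install $pkgs || echo "simulation failed; the package lists may need updating"
else
    echo "apt-get not found; this sketch assumes a Debian-based system"
fi
```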

You can terminate your extra terminal sessions by typing "exit" and root can shut down the machine by:

linuxbox:~# shutdown -h now

That's all for today. See you again soon!

Further Reading

Other installments in this series: 1 2 3 4 5 6 7 8 9 10 11 12 13 14

Monday, March 2, 2009

Linuxcommand.org Terminal Screenshot Colour Scheme

I recently received this email from inquisitive reader Dermot:

Hi

I was going through your website http://linuxcommand.org tonight and find it really easy to follow how the commands and descriptions are laid out. I have a question about the screenshots you use.

The terminal screenshots have a black background, with "[me@llinuxbox me}$" in green and the commands to be run in white. How did you get this colour scheme? Ive been asking on Ubuntuforums and other forums, as it would really make the lines on which you enter commands stand out against the output results of those commands.

Please get back to me if you get a chance.

Thanks

Dermot

Ireland

Thanks Dermot for taking the time to write. The "screen shots" you refer to aren't actually screen shots at all. They're implemented in hand-coded HTML and CSS, but you can create the same effect on your own system. Here's how:

The contents of your prompt are defined in a shell variable called PS1. You can examine its contents like this:

me@linuxbox ~$ echo $PS1

${debian_chroot:+($debian_chroot)}\u@\h:\w\$

This example is from an Ubuntu system. Other distributions will be different.

To set the colors, first use the Edit -> Current Profile dialog in gnome-terminal to set the color scheme to "White on black":

Next, you need to add some ANSI color codes to the prompt string contained in PS1. To change the prompt to green and then back to its original colors (so that subsequent text will remain white), you need to change the prompt string to this:

\[\033[0;32m\]${debian_chroot:+($debian_chroot)}\u@\h:\w\$\[\033[0m\]

You can test your prompt by setting the PS1 variable this way. The single quotes keep the shell from expanding the string before it is stored, and each color code is wrapped in \[ and \] so that bash knows it takes up no space on the line:

me@linuxbox ~$ PS1='\[\033[0;32m\]${debian_chroot:+($debian_chroot)}\u@\h:\w\$\[\033[0m\] '

After you are satisfied with the new prompt design, you can make it permanent by adding these two lines to your .bashrc file:

PS1='\[\033[0;32m\]${debian_chroot:+($debian_chroot)}\u@\h:\w\$\[\033[0m\] '

export PS1
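To see what the two color codes do on their own, outside of a prompt, they can be sent to the terminal with printf. This is just an illustrative sketch; the variable names green and reset are made up for the example.

```shell
# ESC [ 0 ; 3 2 m selects green text; ESC [ 0 m restores the default colors.
green=$(printf '\033[0;32m')
reset=$(printf '\033[0m')

# Imitate the look of the screen shots: green prompt, default-colored command.
printf '%sme@linuxbox:~$%s ls\n' "$green" "$reset"
```

On a terminal that understands ANSI codes, the prompt text appears in green and the command after it in the default color.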

Hope this helps!

You can read more about configuring the prompt at the Linux Documentation Project. They have an excellent HOWTO document entitled:

The Bash-Prompt HOWTO

Friday, February 27, 2009

Project: Building An All-Text Linux Workstation - Part 2

In this episode, we will choose the Linux distribution and perform the installation.

Choosing A Distribution

After some consideration, I selected Debian 5.0 for our workstation project for three reasons:

- Debian is fairly simple on a conceptual level, with a good, straightforward design and good documentation. It's also capable of being installed on very small machines. Its minimum memory requirement is only 44 MB.

- Its package repository is huge. With over 22,000 packages, we are likely to find a good selection of text based applications for our project.

- It just came out and I wanted to play with it ;-)

With that decision out of the way, we next decide on the installation media. I chose the "netinst" (also called the "minimal CD") option. This is a downloadable CD image that contains a minimal base system. Additional packages are installed from the Internet. The install image is 150 MB, much smaller than the full install CDs. To use this option you must have a working network connection. If you need other installation options, take a look at the Debian Installation Guide for guidance.

You can download the installation image from here.

The last step is creating the installation CD. I will assume that your present system, Linux or otherwise, is capable of that and that you know how to do it.

Installation

With our machine ready, it's time to boot up with our install CD. After the CD boots you will receive an attractive Debian splash screen listing various install options. For our purposes, select "Install", not "Graphical Install".

The installer is pretty easy to use and in most cases the default selections are fine. The arrow keys move from selection to selection as do the tab/shift-tab keys. The space bar is used to toggle the contents of check boxes.

- When the prompt appears for disk partitioning, select "Guided - Use entire disk" and "All files in one partition".

- At the "Set up users and passwords" screen, you will be prompted to set the password for the root account. If you have been using Ubuntu up to this point, I have to explain that Debian, like most Linux distributions, has a discrete root account rather than using sudo for everything as Ubuntu does. Much of the early work we will perform on the system will require root access. Choose a root password that is both strong and easy for you to remember.

- Next, you will be prompted to create your personal account. In keeping with LinuxCommand tradition, I named my machine "Linuxbox" and created a user account named "me". You, of course, can use any name you like.

- At the "Select and install software" screen you will be presented with a group of check boxes for different sets of packages to install. Using the space bar, select the "Print server" group and unselect the "Desktop environment" group.

- If you have installed the system using the entire disk as suggested above, answer "yes" to the prompt on the "Install the GRUB boot loader on the hard disk" screen to install GRUB in the disk's master boot record (MBR).

A lot of boot messages should scroll by after the reboot and you will see at the very bottom a login prompt like this:

Debian GNU/Linux 5.0 linuxbox tty1

linuxbox login:

Enter the name root and then the root password and we will see a prompt like this:

linuxbox: ~#

Enter the command shutdown -h now and the machine will shut down.

We're done for today.

Further Reading

Other installments in this series: 1 2 3 4 5 6 7 8 9 10 11 12 13 14

Wednesday, February 25, 2009

Project: Building An All-Text Linux Workstation - Part 1

When I see an old PC in the trash, I have a strong urge to rescue and adopt it like a stray puppy. My wife, of course, has very effective ways of constraining my behavior in this regard, so I don't have nearly as many computers as I want. I hate seeing computers go to waste. I figure if the processor and the power supply are still working, the computer should be doing something. So what if it can't run the latest version of Windows? It can still run Linux!

Over the next few weeks, I will show you how to take an old, slow computer and make it into a text-only Linux workstation with surprising capabilities, including document production, email, instant messaging, audio playback, USENET news, calendaring, and, yes, even web browsing.

Why would anyone want to build a text-only workstation? I don't know. Because we can! And besides, it's a great way to learn a bunch of command line stuff and that's why you're here, right?

So if you want to play along, find yourself a computer with at least the following:

- Pentium processor or above

- 64 MB or more of RAM

- 2 GB or larger hard disk

- PS/2 or USB mouse

- PCI network card (no ISA or wireless, please)

Good luck and I will see you again soon!

Further Reading

Other installments in this series: 1 2 3 4 5 6 7 8 9 10 11 12 13 14

Monday, February 23, 2009

Bash 4.0 Released Today

Version 4.0 of everyone's favorite shell program was released today. I imagine this will be showing up in new Linux distributions soon. A copy of the release announcement is here.

Friday, February 20, 2009

The Unix (Linux) Frame Of Mind

If you are migrating from Windows to Linux, one of the barriers you will encounter (besides the myriad superficial differences in the user interface) is the whole "culture thing."

Using a Unix-like operating system involves more than learning where the buttons are located on the desktop. It requires a different way of thinking about your computer. On top of that, Linux requires that you learn about your relationship with the Free and open source software communities. That will be the subject, no doubt, of many future postings, but for now we'll talk about the Unix frame of mind.

To understand Unix, you have to understand its historical context. Unix certainly has its faults, but if you understand its history, you can see that many of its "features" are due to the state of computing during its formative years. But it's also important to realize that just because something is old does not mean that it is necessarily bad. Take, for instance, the command line interface. If you listen to the pundits and PC magazine writers, you would think that the command line is the equivalent of waterboarding to the modern computer user. I liken use of a graphical user interface to watching television and the command line to reading a book. They are very different, but both are valid (and valuable) if used in the proper way.

So what is "the Unix frame of mind?" It is based on some of the following principles:

The computer is multi-user. Unix was designed for "big" computers. Big, expensive computers that supported many users at the same time. This has a number of implications. For one, it meant a different security model. On a multi-user computer, it is essential that one user cannot trash another user, or the entire system. It also implies that communities can form among the computer's users. If you have Fedora or any of the other Red Hat family of Linux distributions, you may have noticed that it runs sendmail by default. This is part of the Unix tradition. Users on a system send email to each other. The first form of instant messaging was the write command, which sent a text message to another user's terminal session.

Automate everything. Back in the 1960s, IBM used a marketing slogan, "Machines should work. People should think." Yet today, we have millions of office workers sitting in front of PCs pumping their mice all day. Why? It's because the computer is not doing the work. The human is. The Unix way is to instruct the computer how to do the work and then forget about it. Back in the 1990s, I marveled at the cron daemon. It could perform tasks on a scheduled basis. I remember leaving my PC on all night and letting it do the work I needed and having a summary of the results each morning. It did the work so I didn't have to.

Everyone programs. The computer is a tool, or rather, it is a huge collection of tools that can be arranged to perform work. To do this, you must program. You have to break your tasks into simple steps and arrange the tools to perform them. Unix actually makes this fairly simple. You can create pipelines of commands or write shell scripts. Have you noticed how, over the years, Windows has removed all the programming tools from its system? This keeps the users helpless. If they want a solution, they have to go out and buy it. By contrast, every major Linux distribution includes the shell, perl, python, text editors and thousands of tools for building solutions. If you need more, there is just about every programming tool under the sun available for immediate download.

A computer is not "easy to use." That is a marketing myth. A computer is no easier to use than a violin, but with much study and practice, both can produce beautiful music.

It all depends on your frame of mind.

Every Linux user should learn something about the history of Unix. Wikipedia is a good place to start:

http://en.wikipedia.org/wiki/Unix

Also check out Eric Raymond's The Art Of Unix Programming:

http://www.faqs.org/docs/artu/

Wednesday, February 18, 2009

A Brief History Of Printing

The following is an excerpt from my upcoming book, "The Linux Command Line" due out later this year.

To fully understand the printing features found in Unix-like operating systems, we first have to learn some history. Printing on Unix-like systems goes way back to the beginning of the operating system itself. In those days, printers and the way they were used were much different than today.

Printing In The Dim Times

Like the computers themselves, printers in the pre-PC era tended to be large, expensive and centralized. The typical computer user of 1980 worked at a terminal connected to a computer some distance away. The printer was located near the computer and was under the watchful eyes of the computer’s operators.

When printers were expensive and centralized, as they often were in the early days of Unix, it was common practice for many users to share a printer. To identify print jobs belonging to a particular user, a banner page was often printed at the beginning of each print job displaying the name of the user. The computer support staff would then load up a cart containing the day’s print jobs and deliver them to the individual users.

Character-based Printers

The printer technology of that period was very different in two respects. First, printers of that period were almost always impact printers. Impact printers use a mechanism to strike a ribbon against the paper to form character impressions on the page. Two of the popular technologies of that time were daisy wheel printing and dot-matrix printing.

The second, and more important, characteristic of early printers was that they used a fixed set of characters that were intrinsic to the device itself. For example, a daisy wheel printer could only print the characters actually molded into the petals of the daisy wheel. This made printers of the period much like high-speed typewriters. As with most typewriters, they printed using monospaced (fixed width) fonts. This means that each character has the same width. Printing was done at fixed positions on the page and the printable area of a page contained a fixed number of characters. Printers, depending on the model, most likely printed ten characters per inch (CPI) horizontally and six lines per inch (LPI) vertically. Using this scheme, a US letter sheet of paper is eighty-five characters wide and sixty-six lines high. Taking into account a small margin on each side, eighty characters was considered the maximum width of a print line. This explains why terminal displays (and our terminal emulators) are normally eighty characters wide. It’s to provide a WYSIWYG (What You See Is What You Get) view of printed output using a monospaced font.
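
If you want to check the page geometry yourself, the shell can do the arithmetic. This is only a quick sketch; the variable names are my own, and since shell arithmetic is integer-only, I have expressed the paper size in tenths of an inch as a convenience.

```shell
# US letter paper is 8.5 x 11 inches. Shell arithmetic only handles
# integers, so the dimensions are given in tenths of an inch.
width_tenths=85
height_tenths=110

cpi=10   # characters per inch, horizontally
lpi=6    # lines per inch, vertically

chars_wide=$(( width_tenths * cpi / 10 ))
lines_high=$(( height_tenths * lpi / 10 ))

echo "$chars_wide characters wide, $lines_high lines high"
```

The result matches the eighty-five by sixty-six figure given above, before any margins are subtracted.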

Data is sent to a typewriter-like printer in a simple stream of bytes containing the characters to be printed. For example, to print an “a”, the ASCII character code 97 is sent. In addition, the low-numbered ASCII control codes provided a means of moving the printer’s carriage and paper using codes for carriage return, line feed, form feed and the like. Using the control codes, it’s possible to achieve some limited font effects such as bold face by having the printer print a character, backspace and print the character again to get a darker print impression on the page.
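
The byte stream described above is easy to poke at from the shell. Here is a small sketch using the standard printf and od commands. Note that a terminal emulator will normally just overwrite the character when it sees a backspace rather than darken it, so the example dumps the bytes instead of displaying them.

```shell
# Look up the ASCII code of "a". A leading quote tells printf to
# print the numeric value of the character that follows it.
code=$(printf '%d' "'a")
echo "$code"

# Build the overstrike sequence an impact printer would receive for
# a bold "a": the character, a backspace (ASCII 8), the character again.
# od -An -c shows the bytes rather than sending them to the terminal.
printf 'a\ba' | od -An -c
```

The first command prints the character code, and the od output shows the three bytes (a, backspace, a) that make up the overstruck character.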

Graphical Printers

The development of GUIs led to major changes in printer technology. As computers moved to more picture-based displays, so too did printing move from character-based to graphical techniques. This was facilitated by the advent of the low-cost laser printer which, instead of printing fixed characters, could print tiny dots anywhere in the printable area of the page. This made printing proportional fonts (like those used by typesetters) and even photographs and high quality diagrams possible.

However, moving from a character-based scheme to a graphical scheme presented a formidable technical challenge. Here’s why: the number of bytes needed to fill a page using a character-based printer can be calculated this way (assuming sixty lines per page each containing eighty characters):

60 X 80 = 4800 bytes

whereas a three hundred dot per inch (DPI) laser printer (assuming an eight by ten inch print area per page) requires:

(8 X 300) X (10 X 300) / 8 = 900000 bytes

The need to send nearly one megabyte of data per page to fully utilize a laser printer was more than many of the slow PC networks could handle, so it was clear that a clever invention was needed.
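
For the curious, the two page sizes can be recomputed in the shell. This is a minimal sketch with variable names of my own choosing. It assumes one bit per dot (a monochrome page), which is what the division by eight in the calculation above implies.

```shell
# Character printer: one byte per character position on the page.
lines=60
cols=80
char_bytes=$(( lines * cols ))

# 300 DPI laser printer, 8 x 10 inch print area.
# Assumes one bit per dot, so eight dots fit in each byte.
dpi=300
width_in=8
height_in=10
laser_bytes=$(( (width_in * dpi) * (height_in * dpi) / 8 ))

echo "character page: $char_bytes bytes"
echo "laser page:     $laser_bytes bytes"

# Integer division, so the ratio is rounded down.
echo "ratio:          $(( laser_bytes / char_bytes ))"
```

By this reckoning, a graphical page needs well over a hundred times as much data as a character page.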